The AVI SSVEP Dataset is a free dataset (for non-commercial use) containing EEG measurements from healthy subjects exposed to flickering targets in order to trigger SSVEP responses. The dataset was produced as part of a master's thesis. All data were recorded using three electrodes (Oz, Fpz, Pz) during winter 2012-2013. The purpose of this dataset is to help non-commercial projects get started with SSVEP responses using real data.

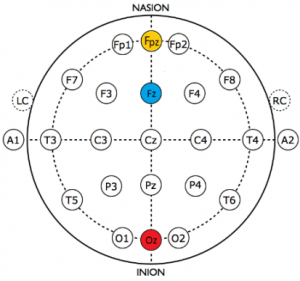

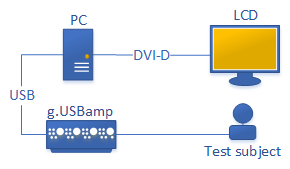

Figure 1 shows the setup used for all experiments. The signal electrode is placed at Oz, while the reference is at Fz and ground at Fpz, using the standard 10-20 system for electrode placement. Reference and ground can be set to other positions such as earlobes and mastoids. The right figure shows the hardware setup, where the LCD monitor, a BenQ XL2420T, has a refresh rate of 120 Hz. The only processing applied to the data is an analog notch filter at the mains frequency (50 Hz).

Figure 1: Experimental setup

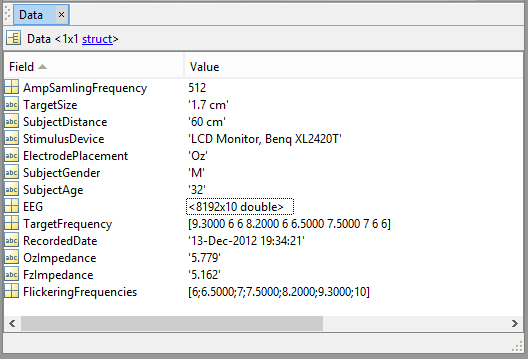

The data are stored as .MAT files, typically opened using Matlab or Octave. Alternatively, the raw EEG and target frequencies are provided in .DAT files as comma-separated values, which can be opened in any text editor. The difference between the .MAT and the .CSV files is that the .MAT files contain more metadata about the setup.

Hi,

Thanks a lot for the data and the really quick response! 🙂

Cheers!

Raunaq

Hi Adnan,

If it’s not too much trouble, I’d also really appreciate it if you could send me your code so that I can reproduce your results.

Thanks!

Raunaq

Hi Raunaq,

If you for example run a simple FFT on one column of data in the multitarget files, you should get a clear indication of where the peak is located. I am unfortunately unable to share my source code at present time due to pending patent cases in which the implementation is essential.

Best regards

Adnan

Hi Adnan,

I ran a simple FFT on the first column of the file “Sub1_2_multitarget.mat” (code here: http://pastebin.com/kzHEr0Xt)

and got the following result: http://i.imgur.com/AFjjRWG.jpg

In this Fourier spectrum there is no good indication of where the peak is located. There should be a peak at 9.3 Hz. Is there any preprocessing that is needed to get a clear peak in the spectrum?

Best regards

Andreas

Hi Andreas,

Looking at your output, it appears that the FFT is not applied correctly. Also, you should look at a narrower spectrum since you already know the range. I’ve added some sample code below that should help you.

Hint: Sometimes it also helps to look at second and third harmonics 🙂

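```matlab
signal = Data.EEG(:,1);               % first column = one trial
fs = Data.AmpSamlingFrequency;        % sampling rate (field name is spelled like this in the multitarget files)
L = length(signal);
y = signal;
NFFT = 2^nextpow2(L);                 % zero-pad to the next power of two
Y = fft(y,NFFT)/L;                    % scaled FFT
f = fs/2*linspace(0,1,NFFT/2+1);      % frequency axis from 0 to fs/2
y_y = 2*abs(Y(1:NFFT/2+1));           % single-sided amplitude spectrum
x = f;
plot(x,y_y);
xlim([0 50]);                         % only look at 0-50 Hz
```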

Thank you! 🙂 With your code the spectrum looks correct with clear peaks.

I am unable to download the dataset from the given links. Can you provide some other way?

ASAP, please! Or send it to me at the email below.

Hello Umair,

I just checked from two different machines in different countries, and the links work fine. Please try again:

http://setzner.com/dls/AVI_SSVEP_Dataset_MAT.zip

http://setzner.com/dls/AVI_SSVEP_Dataset_CSV.zip

Hi Adnan

I just want to ask what the benefit is of having the stimulus frequencies so close to each other. Wouldn’t that result in overlapping peaks at stimulus frequencies that are close to each other?

Thanks

Chia-Hung

Hi Chia-Hung,

I’d say there are three things to keep in mind regarding the picking of frequencies

Perception

The first thing to keep in mind is that it is easier to distinguish between, for example, [6.0 Hz vs. 6.5 Hz] than [10 Hz vs. 11 Hz]. By looking at a stimulus, in the first case, you can actually see the difference, while with the second example, it’s much harder to see which one is faster.

Harmonics

You also need to keep in mind that there can be useful information in the harmonics. So instead of only looking at 6Hz, you might also look at 12Hz. So then you can’t use 12Hz as a stimulus because they’ll overlap. So you need to make sure that fundamental frequency does not overlap with harmonics of other stimuli.

Stimulus accuracy

The better your stimulus performs, the more accurate and narrow your peaks will be. If you can obtain perfect frequencies, then you can have a resolution of 0.1 Hz and still see a difference in the SSVEP response. So whether you keep frequencies close depends on how narrow you can get your spikes around responses. Keep in mind that PCs have restrictions here due to the refresh rate and tasks running in the background, so a hardware stimulus can be an advantage.

Hope this helps 🙂

Hi,

I have selected 15, 20, 30 and 40 Hz as frequencies for the stimulation panel.

But can they overlap? As you said:

“So instead of only looking at 6Hz, you might also look at 12Hz. So then you can’t use 12Hz as a stimulus because they’ll overlap.”

Hi Uzma,

15Hz and 30Hz would overlap yes. Look at the figure below which is FFT for 2 seconds of EEG where person is stimulated with 9.3Hz. Notice it’s hard to see difference in the low frequencies, while 18.6 (9.3 Hz * 2) has a huge spike 🙂
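The harmonic rule discussed above can be checked mechanically. The following is a minimal sketch, not the author's code: it assumes that only the 2nd and 3rd harmonics matter and that two peaks "overlap" when they are within 0.25 Hz of each other (both values are illustrative assumptions).

```matlab
% Hypothetical check: flag stimulus frequencies that coincide with a
% harmonic (2x, 3x) of another stimulus frequency.
stimuli   = [15 20 30 40];   % Hz, the set proposed in the question above
harmonics = [2 3];           % harmonic orders to check (assumption)
tol       = 0.25;            % Hz, assumed width of a spectral peak

conflicts = zeros(0, 3);     % rows: [fundamental, harmonic order, clashing stimulus]
for f0 = stimuli
    for h = harmonics
        for f1 = stimuli
            if f1 ~= f0 && abs(h*f0 - f1) <= tol
                conflicts(end+1, :) = [f0, h, f1];
            end
        end
    end
end
disp(conflicts);
```

For this particular set the check reports two clashes: the 2nd harmonic of 15 Hz lands on 30 Hz, and the 2nd harmonic of 20 Hz lands on 40 Hz.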

Thanks ,

Can you please guide me more? I’m an undergraduate student.

My project is SSVEP-based device control using an FPGA.

I have downloaded the data above, and to analyse it I have done the following steps:

Apply LDA algorithm

FFT response

power spectral density

What should be my next step? If I am still working on recorded data, which signals should I use for amplification, filtering etc.?

Check my paper on the topic and see if it helps.

If you’ve implemented all those things, it sounds like you’re close to done though. It sounds like all you’re missing is:

– Randomly pick out some columns from Data.EEG in the files as the training set for your LDA.

– Randomly pick some columns from Data.EEG as the test set for your LDA.

To make it a bit harder, you should make new test and training files which contain columns from different subjects. Maybe use 70% of the columns for training and 30% for test.
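The random 70/30 split of columns could be sketched as follows. This is only an illustration: the matrix `EEG` is a random stand-in for `Data.EEG` (assumed to hold one trial per column), and the variable names are hypothetical.

```matlab
% Stand-in for Data.EEG: rows are samples, columns are trials (assumed shape).
EEG = randn(15360, 27);
nTrials = size(EEG, 2);

% Shuffle the column indices and cut at 70 %.
order    = randperm(nTrials);
nTrain   = round(0.7 * nTrials);
trainIdx = order(1:nTrain);
testIdx  = order(nTrain+1:end);

trainSet = EEG(:, trainIdx);   % columns used to fit the LDA
testSet  = EEG(:, testIdx);    % held-out columns for evaluation
```

The target-frequency labels that belong to each column need to be indexed with the same `trainIdx` and `testIdx`, so that every trial keeps its label.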

you dont know but you saved my life, i was so frustrated that i was not able to detect the f* frequency by any available theory i have learned in my life. i had almost given-up on my project but today i applied my code on your data and it worked as magic.

thanks thanks thanks thanks thanks thanks thanks thanks thanks thanks thanks thanks thanks ….thanks^(infinity) 🙂

Great! I’m happy it works for you 🙂 I’ve been in the same situation, and I know that it can be a time sink not knowing if it’s the method or the signal that something is wrong with.

Best of luck with your project 🙂

Hi,

I’m also in trouble, but I cannot give up my project.

Can you please tell me what steps you applied to this recorded data to analyse it?

Hi Uzma,

All steps are described in my paper on the topic

Hi Adnan,

Can you help me with how to build an SSVEP stimulus (screen with flashing lights) in Matlab?

Thanks,

Amjad

Hi Amjad,

I used C# to generate the flickering, but it is possible in MATLAB as well, although you need certain tools for it. There are two tools for MATLAB:

http://www.vislab.ucl.ac.uk/cogent_graphics.php

Hi Adnan,

firstly thanks for data

I applied an FFT to EEG data recorded in response to a 10 Hz stimulus. In the spectrum I can see the 10 Hz and 20 Hz components clearly. Then I calculated the periodogram with MATLAB code ( periodogram(x,[],'onesided',NFFT/2,fs) ), but I can’t see any peak around 10 Hz.

what is the problem?

Hi Talha,

Try this – looks good to me:

periodogram(signal,rectwin(L),L,fs)

thanks this is good
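For readers without the Signal Processing Toolbox, the same kind of estimate can be written with plain `fft`. The sketch below is an assumption-laden illustration: it uses a synthetic 10 Hz signal instead of the dataset, a rectangular window, and an FFT length equal to the signal length, which is my understanding of what the `periodogram` call above computes (I have not verified it against the toolbox output).

```matlab
fs = 512;                          % sampling rate of the dataset (Hz)
L  = 2 * fs;                       % 2 s of data
t  = (0:L-1)' / fs;
signal = sin(2*pi*10*t) + 0.5*randn(L, 1);   % synthetic 10 Hz "SSVEP" plus noise

X   = fft(signal);                 % NFFT = L, rectangular window (no tapering)
Pxx = abs(X(1:L/2+1)).^2 / (fs*L); % two-sided PSD, non-negative frequencies only
Pxx(2:end-1) = 2 * Pxx(2:end-1);   % fold negative frequencies in: one-sided PSD
f   = (0:L/2)' * fs / L;           % frequency axis, 0 .. fs/2

inBand = (f >= 5) & (f <= 15);     % search range around the expected peak
fBand  = f(inBand);
[~, k] = max(Pxx(inBand));
peakHz = fBand(k);
```

A useful sanity check on the scaling is that the PSD integrates to the mean power of the signal, i.e. `sum(Pxx)*fs/L` should equal `mean(signal.^2)`.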

Hi Adnan,

Which method did you use to obtain 6.5 Hz and 7 Hz on a 120 Hz monitor?

Hi Talha,

I switch colors based on time – not frames. This results in a few color switches along the way being incorrect – if it was more precise, the EEG response would be even better.

Even if you go for 6 Hz or 10 Hz, you’ll still get errors during switches because the computer is running an operating system and other processes in the background which at times will cause a delay.

excuse me I can’t understand exactly. What does it mean “I switch colors based on time – not frames. This results in a few color switches along the way being incorrect – if it was more precise, the EEG response would be even better.”

What is the difference between time and frames? A lot of papers say “because of the refresh rate, only a limited set of frequencies is available when you use a monitor”. Some groups work on increasing the available frequencies with different methods.

If you can achieve the frequencies you want using time, why are these groups working on this?

Time instead of frames for changing colors:

Time:

If you change colors based on time, you count milliseconds. If you want to produce 6Hz, you change color (black to white or white to black) every 83.3 ms.

Frames:

If you use frames and your monitor updates at 60 Hz (frames per second), then you change color (black to white or white to black) every 5 frames, which is the same 83.3 ms. The requirement, however, is that you have full control over the monitor.
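To make the time-versus-frames comparison concrete, the switch interval can be tabulated for a set of frequencies. This is a small sketch assuming the 120 Hz monitor from the setup description and the single-target frequencies listed further down in this thread; the variable names are made up for the example.

```matlab
stimHz    = [6 6.5 7 7.5 8.2 9.3 10 12];  % target frequencies mentioned in this thread
refreshHz = 120;                          % monitor refresh rate (BenQ XL2420T)

halfPeriodMs    = 1000 ./ (2 * stimHz);        % time between color switches
framesPerSwitch = refreshHz ./ (2 * stimHz);   % the same interval counted in frames
wholeFrames     = abs(framesPerSwitch - round(framesPerSwitch)) < 1e-9;

for i = 1:numel(stimHz)
    fprintf('%5.1f Hz: switch every %6.2f ms = %6.3f frames (whole number: %d)\n', ...
            stimHz(i), halfPeriodMs(i), framesPerSwitch(i), wholeFrames(i));
end
```

Only frequencies whose switch interval is a whole number of frames can be produced exactly by counting frames; the others are the ones that require the time-based approach described above.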

Why some use time and some use frames:

Many groups use PsychToolbox or Cogent, which take full control over the graphics card and allow you to control and check frames very accurately, but you lose the ability to do anything else on the monitor at the same time.

Also, there is a great difference in how monitors work and what refresh rate on monitors means. A CRT monitor updates the whole screen in every frame so you are certain that everything is refreshed. Refresh rate on LCD monitors is different though. The refresh rate indicates how fast the whole screen CAN be updated. But instead of updating the whole screen in every frame, an LCD monitor updates only the part that it knows has changed.

So the reason why many research groups use frames and refresh rates is more related to tradition with old CRT monitors than to performance. Both approaches work, but which one is best depends on the technology that you use.

You have explained it clearly, but some papers contradict your explanation. In “Multiple Frequencies Sequential Coding for SSVEP-Based Brain-Computer Interface” the author says “Owing to both the limitation of refresh rate of liquid crystal display (LCD) or cathode ray tube (CRT) monitor”.

In spite of using lcd monitor some papers use method for increasing available frequencies (A new dual-frequency stimulation method to increase the number of visual stimuli for multi-class SSVEP-based brain–computer interface).

What’s your opinion on this topic?

“Limitations”:

Many assume that because you have a 60 Hz monitor, you can ALWAYS produce accurate 6 Hz flickering – this is a wrong assumption on a PC because the PC is also busy doing many other things at the same time. You can produce both 6 Hz and … 7.2 Hz (random number as an example) – BUT 6 Hz is easier to produce for the monitor and will be more accurate. So to detect 7.2 Hz, you’ll have to sample for longer time than for the 6 Hz.

On the other hand, if you work with a PC, 7.2 Hz will be easier to produce than 30 Hz on a 60 Hz monitor, simply because the PC will be very busy. So refresh rate is not everything.

Also, notice in the dataset that you downloaded from my site, that you CAN see those frequencies which other groups say are not possible to produce – so the recordings prove clearly that it’s possible 🙂

dual-frequency SSVEP

Thanks for sharing that paper – it’s well written and very interesting. It looks like it’s easier for a person to focus when the presented pattern has more changes. From my own experience, I’d say it makes sense because it’s hard for a person to get focus once they switch target. It’s harder to implement, but based on their results, it seems worth doing – but start with one frequency and then modify that 🙂

I have question about classification.

I have applied an FFT to EEG data recorded in response to a 10 Hz stimulus (I used 30 seconds of data). In the spectrum I can see the 10 Hz and 20 Hz components clearly. In this way classification is very easy. But I think classification should not be that easy, since a lot of groups work on increasing classification performance.

Which steps do you follow in classification?

What is the difference between online classification and offline classification?

Difficulty depends on which performance you want to get. If you take the full 30 seconds, the classification should be very easy – just take the highest peak and you’re done. But what you want to do is to detect the frequency as fast as possible. If you can find it within 1-2 seconds, that’s very good – and then it of course becomes harder because the data won’t be nearly as pretty 🙂 You can see what I do in my paper on the topic
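The "take the highest peak" idea on a short window can be sketched like this. It is not the classifier from the paper: the signal is synthetic, the candidate list is an example, and adding the 2nd-harmonic amplitude to the score is an assumption inspired by the harmonics hint earlier in the thread.

```matlab
fs      = 512;                           % dataset sampling rate (Hz)
targets = [6 6.5 7 7.5 8.2 9.3];         % candidate stimulus frequencies (Hz)
L       = 2 * fs;                        % 2 s window
t       = (0:L-1)' / fs;

% Synthetic trial: 7.5 Hz response with a weaker 2nd harmonic, plus noise.
x = sin(2*pi*7.5*t) + 0.5*sin(2*pi*15*t) + 0.5*randn(L, 1);

amp   = 2 * abs(fft(x)) / L;             % amplitude spectrum, bin spacing fs/L
binOf = @(f) round(f * L / fs) + 1;      % nearest FFT bin for a frequency

% Score = amplitude at the fundamental + amplitude at the 2nd harmonic.
score = amp(binOf(targets)) + amp(binOf(2 * targets));
[~, best]  = max(score);
detectedHz = targets(best);
```

With a 2 s window the bins are 0.5 Hz apart, so targets such as 8.2 Hz and 9.3 Hz fall between bins; zero-padding the FFT or using a longer window gives a finer grid at the cost of speed.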

Offline:

Let subjects sit and look at the flickering targets or some other BCI while you record their EEG – at this point, the person can’t control the BCI. Then you sit somewhere and create a classification algorithm that can recognize SSVEP.

Online:

You bring same or other subjects in and let them control the BCI. While you’re reading EEG activity, the classifier lets the subjects control the BCI.

So by doing offline tests first, you can fine-tune your algorithms as much as you want before you bring in subjects for real testing.

firstly thanks for your paper. I have read and understood own question

Some papers report that classification accuracy is good offline but decreases online. If the only difference between online and offline classification is the BCI control, and they use the same algorithm, why does the accuracy decrease?

In offline analysis you fit a classifier to EEG data that is already recorded (offline dataset). You fit and adjust until you get the highest possible accuracy.

In online analysis you work with new EEG data, but you apply a classifier that was made to fit another set of EEG data (offline dataset).

It’s similar to learning to ride a bike. You train with a 1-gear bike, 3-gears bike and maybe a bmx. But then suddenly someone asks you to ride a race bike – you’ll be able to do it because you trained on different bikes, although your performance will be worse than if you had practiced on a race bike from start.

Hi Adnan

I computed the autocorrelation of 2 seconds of data, then applied an FFT to it.

I obtained a better result.

But why does autocorrelated data give a better result?

Hey,

When you understand how autocorrelation works, you should be able to figure out the answer yourself 🙂

Hello Adnan,

Is there any difference between “auto-correlating the EEG signal, then taking FFT” and ” taking FFT of the signal and then calculate absolute square of it” to estimate the PSD? In theory they must be same but since this is a finite signal, there should be a small difference. Can you say autocorrelation plus FFT is better than FFT plus absolute square?

Thanks

Hi Ali,

They are not the same in this case, as autocorrelation modifies the signal before continuing to the FFT. In the second approach you don’t change the data at all. Autocorrelation serves as noise reduction here.
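The textbook relation raised in the question can be checked numerically. The sketch below uses a synthetic signal and the full, unscaled autocorrelation (all lags, computed with `conv` to stay toolbox-free); under those specific assumptions the two routes agree to rounding error. In practice the results differ once the autocorrelation is estimated differently, for example with only a subset of lags, with unbiased scaling, or followed by a shorter FFT, and that may be where the noise-reduction effect described above comes from. This is my reading, not a description of the author's implementation.

```matlab
fs = 512;  L = 2 * fs;
t  = (0:L-1)' / fs;
x  = sin(2*pi*10*t) + 0.5*randn(L, 1);    % synthetic 10 Hz signal plus noise

N = 2*L - 1;                              % number of lags in the full autocorrelation
r = conv(x, flipud(x));                   % raw autocorrelation, lags -(L-1) .. (L-1)

viaAutocorr = abs(fft(r));                % magnitude of the FFT of the autocorrelation
viaSquare   = abs(fft(x, N)).^2;          % squared magnitude of the zero-padded FFT

relErr = max(abs(viaAutocorr - viaSquare)) / max(viaSquare);
```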

I want to ask about your recorded data.

The same frequency is repeated in 3 to 4 columns,

e.g. 10 Hz in columns 1, 2, 3,

and the EEG data has 15360 rows. Is that the number of repetitions of the test per person?

Kindly explain the file “sub1_singletarget”.

Thanks in advance.

In the file you mention:

– Each file is one subject (sub1 = subject 1).

– Each column is one target (27 columns = 27 targets)

– 15360 / 512 (sample rate) = 30 sec EEG per trial

– Columns represent trials, rows are EEG readings.

– Yes, the person looks at the same target repeatedly.
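The layout described above (one 30 s trial per column at 512 Hz) makes it straightforward to cut a trial into shorter analysis windows. A minimal sketch, with a random vector standing in for one column of `Data.EEG` and a 2 s window chosen as an example:

```matlab
fs     = 512;                      % sample rate (Hz)
trial  = randn(15360, 1);          % stand-in for one column of Data.EEG
winSec = 2;                        % window length in seconds (example value)

trialSec = numel(trial) / fs;                  % 15360 / 512 = 30 s per trial
winLen   = winSec * fs;                        % 1024 samples per window
nWin     = floor(numel(trial) / winLen);       % 15 whole windows in 30 s
windows  = reshape(trial(1:nWin*winLen), winLen, nWin);   % one window per column
```

Each column of `windows` can then be passed to the FFT on its own, which is the kind of segmenting discussed later in this thread.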

thanks

Hi Adnan,

In the description, you said only notch filter is applied to the data at 50 Hz. I guess an analog bandpass filter is applied to the data as well. Then I saw your conference paper related to this dataset. You mentioned about the bandpass filter in the experiment setup. Is it exactly the same dataset with the paper?

One more question. After how many seconds/milliseconds the SSVEP response appear on EEG? Have you ever seen a well accepted (neurological) reference paper about it? Because it will limit your detection time.

Thanks

Hi Ali,

Q1: Paper dataset and website dataset:

The conference paper and this site use very different datasets. The conference paper deals with realtime data where the user gets feedback while selecting. The goal there was to select as fast as possible.

The purpose of this dataset was more to see what SSVEP actually looks like and how it behaves. That’s why only a 50Hz filter is used here.

Q2: How fast can SSVEP be detected?

It is not as clear as with P300, which only requires that the person is “surprised”. For SSVEP, there are many more factors: the flickering needs to be precise, and vision impairment, light settings etc. also play a role. So I haven’t seen any papers on it, and I think it’ll take a while before we see a good paper on it 🙂

Hello, I really appreciate, that you share your data about SSVEP. Im researching this method and algorithms for best classification right now. There is high chance that I will be able to use wireless (no 50Hz noise) EEG device, so I might share my data here in the future.

If I have some questions for you, and you will have time and willingness, where is best place to ask you questions? here? or perhaps email?

First question (here) for a start: if I understand this correctly, for a 10 Hz peak you switch colors ten times a second. Shouldn’t it be 20 times, since 1 Hz would be from white to black and from black back to white in 1 s (like a period of a cosine, 1 => 0 => -1 => 0, where 1 and -1 would be white and black)? I know the question might be badly written, but I hope you understand it. I made an application in Java for SSVEP stimulation but haven’t tested it yet, and I won’t be able to for a while.

Second question: Do you think its good idea to take a part of FFT for classification? for example you use 8Hz, 12Hz and 16Hz frequencies, and you put 3 different parts of original FFT:

1. FFT only 6-10Hz for 8Hz

2. FFT only 10-14Hz for 12Hz

3. FFT only 14-18Hz for 16Hz

Do you think it is a good idea to filter the original signal (before the FFT) with a bandpass filter at specific frequencies? For example, for 8 Hz it would of course be at least -3 dB at 6 Hz and lower and at 10 Hz and higher.

Even if you dont answer anything, thanks for very useful data and informations.

Hey there. I’m glad you like it and hope it’s useful to you. I prefer the questions here, because many people have the same issues and troubles when working with this topic – It is easier to refer to questions/answers in this page than revisit old emails or rewrite answers. 🙂

Blinking frequency

Yes, it’s exactly like a period in cosine. Frequency represents how long it takes the sequence to finish (showing black AND white), and not the time from one color to the next. So for 10 Hz, white and black will each occur 10 times per second.
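Counting the color phases frame by frame illustrates the point. The sketch below assumes a 120 Hz monitor and a stimulus that starts on black; it only works as written when the number of frames per color is a whole number.

```matlab
stimHz    = 10;                    % flicker frequency (Hz)
refreshHz = 120;                   % frames per second
frame     = 0:refreshHz-1;         % frame indices covering one second

framesPerColor = refreshHz / (2 * stimHz);          % 6 frames black, then 6 frames white
isWhite = mod(floor(frame / framesPerColor), 2);    % 0 = black, 1 = white

whiteOnsets = sum(diff([0 isWhite]) == 1);          % black-to-white transitions
blackOnsets = sum(diff([1 isWhite]) == -1);         % white-to-black, counting the start
```

Both counters come out at 10, so one second at 10 Hz contains 10 white phases and 10 black phases, which corresponds to 20 color changes per second.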

Segmenting FFT into smaller parts

Most groups working with SSVEP use only parts of the FFT for their classification, as you suggest – although with even narrower windows. When you read papers, you’ll always see the full spectrum because it’s important to show the reader how dominant a frequency is compared to others.

In terms of your example, keep in mind that 8 Hz and 16 Hz can be difficult to distinguish because the latter is a second harmonic of the first, meaning that you’ll see a peak in 16 Hz even if you’re exposing the person to 8 Hz. So, be careful which frequencies you choose.

Pre-processing EEG

Real signals always need some pre-processing to remove artifacts like baseline wander. If you want 6 Hz to be present in the signal, the passband of your bandpass filter should start well below 6 Hz – the lower the order of the filter, the further below it needs to start. For SSVEP I would recommend a passband from 0.5 Hz to two or three times your highest stimulus frequency.
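As a toolbox-free illustration of such a passband, the sketch below zeroes FFT bins outside 0.5 Hz to three times an assumed highest stimulus frequency of 12 Hz. Note that this brick-wall FFT mask is a stand-in I chose for simplicity, not a proper filter design (a Butterworth or similar filter would be the usual choice); it only suits offline data and can cause ringing on real recordings.

```matlab
fs = 512;  L = 4 * fs;
t  = (0:L-1)' / fs;
x  = 5 + 2*sin(2*pi*0.25*t) + sin(2*pi*10*t);   % offset + slow drift + 10 Hz component

lowHz  = 0.5;                  % lower passband edge suggested above
highHz = 3 * 12;               % three times an assumed highest stimulus of 12 Hz

X    = fft(x);
f    = (0:L-1)' * fs / L;      % frequency of every FFT bin
fAbs = min(f, fs - f);         % fold the upper half onto 0 .. fs/2
keep = (fAbs >= lowHz) & (fAbs <= highHz);
y    = real(ifft(X .* keep));  % zero all bins outside the passband
```

In this synthetic example the offset and the 0.25 Hz drift are removed while the 10 Hz component passes through unchanged.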

Hi again. Looks like best place for posting questions is here, thought you dont answer question that fast here, but well I was wrong.

Have next questions for you:

1. Why did you choose these target frequencies you actually picked (6; 6.5; 7; 7.5; 8.2; 9.3; 10; 12 – for single only)? I have read 2-3 articles where they shown that best target frequency (highest amplitude value) is about 15 Hz. In 1st article there was 15 > 12 > 10 > 17 > 5, in 2nd article there was 15 > 6 > 5, 7, 8. Looks like 15 Hz is like key frequency, is there a reason you didnt try that 15 Hz? and why didnt you go higher than 12?

Assuming the precision with which the targets flicker is equally accurate, the “best” frequency will be subject specific. This shouldn’t matter though, because if the flickering is accurate and the person’s vision is fine, then any frequency below ~20Hz is detectable. See

Development of an SSVEP-based BCI spelling system adopting a QWERTY-style LED keyboard

I chose my frequencies primarily based on their harmonics. Since I already had 7.5 Hz, I can’t use 15 Hz. And I’d rather have 7.5 Hz than 15 Hz because my software is more reliable when flickering at lower frequencies.

Hello Adnan,

Could you tell me how you dealt with FA (false activation)? I see that in your dataset there is always a target frequency, so how did you build a system with a relatively low FA ratio, since there is no not-looking-at-stimuli set? Even with one I have no idea how to handle FA. The only idea I had, which is bad in my opinion, is a threshold, but I noticed some people had a big amplitude and some a very low one, so a threshold won’t really work as one system for many subjects…

Btw. If you didnt notice in your dataset, there is typo in variable called AmpSam(p)lingFrequency (in multi sets there is lack of ‘p’ letter).

Hi again,

Thresholds definitely work wonders but there are no rules for it – I also used something like thresholds in mine because it is the easiest to deal with.

Another thing you can do, is to find out which alpha activity the user generally has and avoid having that frequency in your system. It’s different for everyone though. I for example have high activity around 11 Hz when I’m not looking at any targets. Another of my subjects had high activity around 9 Hz.

Thanks for the typo issue. I’ll fix it soon 🙂
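One way to make a threshold less dependent on each subject's absolute amplitude is to compare the peak with the bins around it instead of using the raw amplitude. The sketch below illustrates that idea only; it is not the author's method, and the threshold value, the neighbourhood width and the synthetic signal are all assumptions.

```matlab
fs = 512;  L = 2 * fs;
t  = (0:L-1)' / fs;
targets   = [6 7.5 9.3];           % example stimulus frequencies (Hz)
threshold = 4;                     % hypothetical ratio threshold, needs tuning per setup

x = 0.8*sin(2*pi*7.5*t) + 0.5*randn(L, 1);    % synthetic trial: gazing at 7.5 Hz

amp = 2 * abs(fft(x)) / L;
peakRatio = zeros(size(targets));
for i = 1:numel(targets)
    k = round(targets(i) * L / fs) + 1;             % nearest bin
    neighbours = [k-6:k-2, k+2:k+6];                % nearby bins, skipping the peak itself
    peakRatio(i) = amp(k) / mean(amp(neighbours));  % peak relative to local background
end

[bestRatio, best] = max(peakRatio);
if bestRatio >= threshold
    decision = targets(best);      % confident enough: select this target
else
    decision = NaN;                % treat as "not looking at any target"
end
```

The subject's resting alpha peak, as mentioned above, would still show up as a strong local peak, so avoiding that frequency remains necessary.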

Hi Adnan

Thanks your database. I am trying signal processing methods used in SSVEP paper.

I separated the EEG data into 2-second segments and 5-second segments, and made a basic classification with an FFT. With 2-second segments I achieve 47% accuracy, but with 5-second segments I achieve 27% accuracy.

Why does accuracy drop when I use longer segments? A lot of papers say that accuracy drops when the data length is short.

Hi Talha,

Accuracy should increase significantly if you increase the segment size by that much. I think there’s something wrong with your approach. My advice is:

For each classification

– Display 2 seconds fft.

– Display 5 seconds fft.

– Display which class and frequency it should have selected.

– Display which class and frequency was selected.

Spend some time analyzing where it goes wrong by looking at the two FFTs. The 5 second FFT should look very similar to 2 second FFT, but with peaks being higher.

Hello Talha,

Im sure longer segments should result in higher target frequency amplitude, tho I noticed similiar thing with this dataset. I tried different start time and different window time (for example 1, 2, 3, 5, 10, max seconds) and sometimes 2 sec were better than 5 sec, but when I moved start time, 5 sec were better than 2 sec. So I think its kinda random, subject might have moved, or lose some focus on the target etc. Dont take this as a fact but as a clue. Im planning to check how good is the target frequency amplitude compared to others, for different segment times soon, should be done before 11-05-2015 but cant promise exact date. If you are interested in results I can share here.

I tried again with different time segment and long segments give higher accuracy. Last time I made mistake.

I can’t understand exactly what you want to do with “Im planning to check how good is the target frequency amplitude compared to others, for different segment times”.

When we design stimuli with C#, must we use a timer?

If we use a timer, will the stimulus work accurately?

What I meant by saying “Im planning to check how good is the target frequency amplitude compared to others, for different segment times” is to make some statistics, check power density of FFT for different time windows for example window times of: 1, 2, 3, 5, 10 seconds, on whole AVI Dataset.
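A statistic of this kind could be sketched as below: for each window length, compare the amplitude at the target frequency with the average amplitude of the other bins. The trial is synthetic, and the 3-30 Hz comparison band is an arbitrary assumption.

```matlab
fs   = 512;
full = 30 * fs;
t    = (0:full-1)' / fs;
trial = 0.5*sin(2*pi*10*t) + randn(full, 1);   % synthetic 30 s trial, 10 Hz target

winSec = [1 2 3 5 10];                         % window lengths to compare (s)
ratio  = zeros(size(winSec));
for i = 1:numel(winSec)
    L   = winSec(i) * fs;
    amp = 2 * abs(fft(trial(1:L))) / L;
    f   = (0:L-1)' * fs / L;
    target     = abs(f - 10) < 1e-9;                   % the 10 Hz bin
    background = (f >= 3) & (f <= 30) & ~target;       % comparison band (assumption)
    ratio(i)   = amp(target) / mean(amp(background));  % target vs. background amplitude
end
disp([winSec; ratio]);
```

On stationary synthetic data the ratio grows with window length; on real recordings, changing the start time of the window can change the picture, as noted above.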

I think that it doesnt really matter much what language you use on PC.

I have read that it is best to synchronize with the monitor refresh rate (if working on LCD / CRT screens, especially below 100 Hz refresh rate), but it is possible to use a timer. However, background processes or low PC performance can delay your flickering, and because of that you will get a lower frequency than intended. The refresh rate might also delay your frequencies, because even though the PC changes the color, the monitor will not yet have refreshed the color (the color the subject’s eye perceives).

I hope everything I wrote is understable and correct (my english is not that good).

Hello,

I think I reached lowest branch in last topic / post, cant reply there anymore.

“Another thing you can do, is to find out which alpha activity the user generally has and avoid having that frequency in your system. It’s different for everyone though.” that is very interesting, did you came up with it by yourself or did you read it somewhere (if read, please link if you know where it was). Might be useful for fast traning before using the system. I noticed there is one subject in your dataset where he had like always big amplitude at ~5-7Hz.

For now I got 10 features, and Im using basic thresholding (at least 6 of them must win with others to classify). Btw. did you use maybe CCA (canonical correlation) I heard its best feature for SSVEP, but its average for me atm. Dont know if Im doing something wrong or not, or maybe CCA works good with NN and not with thresholding.

One more thing, do you know why sometimes 2nd harmonic has bigger amplitude than 1st?

Hey,

No worries. Each post allows up to 4 replies yea, otherwise text becomes too narrow 🙂

I can’t take credit for that one. I learned about it from two independent neuroscientists who explained to me how that could be a source of error in classifications.

I didn’t use CCA. I wanted to do it as basic as possible. I’ve asked around, but it’s unclear why second or sometimes even third harmonics are sometimes higher than the original frequency.

Hello again,

I see you had flickering frequencies windows in positions like: 6 – 6.5 – 7 – 7.5 – 8.2 – 9.3 Dont you think it could be better to place them like: 7.5 – 6 – 8.2 – 6.5 – 9.3 – 7 (2rows, 3columns) to have bigger frequency differences next to each other, or maybe it doesnt really matter?

Can you tell me what features did u use? only FFT amplitude?

Hey,

It depends whether or not your subject can perceive that there are other flickering objects near the one that they’re focusing on. If objects are far apart, ordering doesn’t matter, but if they’re very close, it will have an effect.

The signal processing and feature parts are classified, so I can’t help you with that, sorry.

Hi Adnan

I have made a visual stimulus with C#, using a timer.

But the stimulus doesn’t work accurately. What can I do?

Hi Talha,

Making a stimulus is a bit tricky. To make flickering accurate, you need to write code efficiently so everything runs fast. My advice is to put your code on c# programming websites and ask for advice on how to optimize it.

I can suggest trying the free SSVEP stimuli software from:

http://openvibe.inria.fr/steady-state-visual-evoked-potentials/

If this wont work better, maybe there is something else you are doing wrong.

thanks for sharing this – I’m considering publishing a flexible stimulus as well, and wasn’t aware of this project before 🙂

Hi Arthur

I tried to use OpenViBE. I worked with the prepared SSVEP example, but I can’t design my own stimulus. Have you ever designed a visual stimulus with OpenViBE?

Hi Adnan,

Greetings,

My thesis and research mostly involve brain-computer interfaces. I am going to do my research with the SSVEP method, focusing on stimulus properties and on enhancing performance and ITR. The problem is that I cannot get EEG data for SSVEP approaches, because the devices and tools are too expensive for me and my university, so I cannot test my hypothesis. Could you please guide me? How can I access some predefined SSVEP datasets?

I would appreciate your assistance and consideration. I am looking forward to your reply.

Hi Behnaz,

Predefined datasets:

I understand. SSVEP datasets are very rare because the quality of the data depends highly on equipment, subjects and environment setup (stimulus, noise from surroundings, electrode placement etc.). Unfortunately, I haven’t found any decent datasets while browsing the net – and that’s also the reason why I uploaded my data that you can download from this page.

Keep in mind though that my data only records EEG from one channel, and you are therefore limited to one-dimensional signal processing.

Equipment:

Doing BCI research can indeed become expensive, but I think that equipment is essential. Here are some amplifiers that your university should be able to afford, and I’m sure that future projects could also benefit from them:

– Open Bci ~800$

– Emotiv EPOC ~2500$

– GL Bioradio ~3000$

Best regards,

Adnan

Hello,

I also wanted some SSVEP dataset, but only one I found was Adnan’s one (thank God for it) and this ( http://www.bakardjian.com/work/ssvep_data_Bakardjian.html ). Tho this dataset from the link has only 3 different frequencies, I didnt try it because I use 2nd harmonics in my classification, and 14 Hz and 28 Hz are not allowing it.

I wanted to make my own dataset with my university’s hardware, but many problems with the hardware occurred. Unfortunately I must say that I won’t be able to provide another SSVEP dataset (~95% sure).

Btw. Adnan, can I use an image or two from your dataset documentation in my research paper? (Of course I will reference the source.)

Thanks for the kind words. Of course – I’m glad there’s more useful stuff for you 🙂

Hi Adnan

Have you ever tried to calculate phase values at the stimulus frequency?

I calculated phases for 10 Hz stimulated EEG. I expected the phase values to be similar to each other, but I obtained different phase values.

What’s wrong?

Hi Talha,

Depending on which method you use, you’ll always get phase values, even if no SSVEP is present.

Assuming that every window that you’re analyzing actually has 10 Hz, then different phase values are still expected because it varies when the person’s brain elicits SSVEP. Sometimes it may take 100 color changes in the stimulus, other times, 130 etc.

Keep in mind that EEG is not nearly as noise-free and consistent as ECG where you’d always have same phase for a healthy person.
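For comparison, this is what the per-window phase looks like on an idealised signal. The sketch uses a noise-free synthetic 10 Hz cosine with an arbitrary phase of -60 degrees, cut into fifteen 2 s windows; every name and value here is an assumption made for illustration.

```matlab
fs = 512;  winLen = 2 * fs;  nWin = 15;
t  = (0:nWin*winLen-1)' / fs;
phi0  = -60 * pi/180;                          % arbitrary response phase (assumption)
trial = cos(2*pi*10*t + phi0);                 % idealised, noise-free 10 Hz response

windows  = reshape(trial, winLen, nWin);       % one 2 s window per column
X        = fft(windows);                       % column-wise FFT
k        = round(10 * winLen / fs) + 1;        % bin of 10 Hz
phaseDeg = angle(X(k, :)) * 180/pi;            % phase of each window in degrees
```

Because each 2 s window contains exactly 20 cycles, all fifteen windows return the same phase here. Real EEG adds noise and a varying response latency on top, which is why the measured phases scatter as described above.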

I used the 10 Hz EEG data in Sub1_singletarget and separated it into 2-second windows. As in the paper “Brain–Computer Interfaces Based on Visual Evoked Potentials” by YIJUN WANG, I applied an FFT to each window and calculated the phase at 10 Hz.

These are the phases I obtained:

1. window phase -66 degrees
2. window phase -83 degrees
3. window phase -96 degrees
4. window phase -108 degrees
5. window phase -27 degrees
6. window phase -116 degrees
7. window phase -39 degrees
8. window phase -46 degrees
9. window phase -28 degrees
10. window phase -31 degrees
11. window phase -31 degrees
12. window phase -25 degrees
13. window phase -59 degrees
14. window phase -112 degrees
15. window phase -55 degrees

The phase values are different. How can I use phases for classification when the phase values are not stable like this?

In the paper “Brain–Computer Interfaces Based on Visual Evoked Potentials” by YIJUN WANG the phases are stable.

I’m assuming that you’re referring to figure 3 in that paper. That figure illustrates a monitor, which would have 6 fields that are all blinking at 10 Hz but shifted. The monitor will have constant shifting values, but the EEG certainly won’t look nearly as nice – which you can also see in figure 4, where classes are more scattered.

The paper refers to a detailed description of the method in the paper Phase Coherent Detection of Steady-State Evoked Potentials: Experimental Results and Application to Brain-Computer Interfaces.My advice would be to try implementing it the way they did, and if you’re still stuck, write a mail to the authors.

Hi Adnan,

I examined the paper you suggested, but I wasn’t able to understand it. Could you explain the paper, or how we obtain the phases and use them?

Hey,

I don’t have experience with this method, so it would take me several days to implement and verify it.

I’m sorry, but I don’t have the time to do that – I strongly encourage you to contact the authors and ask them for sample code – or if you’re a student, ask your supervisors for help.

Hi Adnan, I’m a student and I have an SSVEP dataset. I computed the FFT of the signal, but I don’t know how to classify this information. Could you help me?

Hi – That’s a very broad question. The short answer is that you can just find the peak in the FFT to find the dominant frequency 🙂 Of course, this is a very naive approach which won’t get you far. Luckily for you, there are thousands of scientific papers that describe different classifiers for SSVEP. So use Google Scholar, read and understand what’s out there, and you should get plenty of ideas.
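The naive peak-picking approach can be sketched in a few lines of Python with NumPy. This is only an illustration on a synthetic signal: the 512 Hz sampling rate and the candidate list are taken from the MATLAB script quoted further down in this thread, and the function name `dominant_frequency` is made up for this example.

```python
import numpy as np


def dominant_frequency(signal, fs, candidates):
    """Pick the candidate frequency whose nearest FFT bin has the largest amplitude."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()                 # remove the DC offset
    amplitude = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    scores = [amplitude[np.argmin(np.abs(freqs - f))] for f in candidates]
    return candidates[int(np.argmax(scores))]


fs = 512                                            # assumed sampling rate in Hz
t = np.arange(10 * fs) / fs                         # 10-second synthetic trial
rng = np.random.default_rng(1)
eeg = np.sin(2 * np.pi * 7.5 * t) + 2.0 * rng.standard_normal(t.size)

candidates = [6.0, 6.5, 7.0, 7.5, 8.2, 9.3, 10.0]   # candidate stimulus frequencies
detected = dominant_frequency(eeg, fs, candidates)
print("detected:", detected, "Hz")
```

Restricting the search to the known stimulus frequencies avoids picking up unrelated peaks, but it still fails when a harmonic is stronger than the fundamental or when the window is short.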

Hi Adnan

I obtained an Emotiv EPOC and collected SSVEP data. I used a red LED driven by a signal generator. The subject attended to the stimulus for 30 seconds from a distance of 50 cm. I used 6, 6.5, 7, …, 40, 42, 44 Hz.

It is strange that when I examine the data with the PSD, the peaks are very clear, even at 40, 42 and 44 Hz.

When I examine 6 Hz, the peaks at the 2nd, 3rd, 4th and 5th harmonics are also very clear.

In this case classification is very easy.

Have you ever faced such a situation? What do you think about this?

Hi Talha,

What FFT window size do you use to get the PSD? The whole 30 seconds?

As far as I know, hardware stimuli, for example LEDs rather than LCD monitors, work better. I have also read that you can get SSVEPs even up to 70 Hz, but the best (1st harmonic) frequencies are between 6 Hz and 15 Hz.

I agree with Arthur’s answer. If your window is 10+ seconds, the frequency should be easy to detect, especially when you use hardware. The challenge in SSVEP is using windows that are as small as possible while obtaining 90%+ accuracies.

When I work with a 2-second window using only the PSD, I can classify the stimulus easily, even at high frequencies.

Hi Talha,

I am preparing a paper about a novel SSVEP detection algorithm. I need more datasets for analysis. Can you share your data with me? I will cite your work for sure.

Thanks

Why do we use Psychtoolbox or Cogent instead of a basic timer?

You can use whatever tools you like. If you like basic timers, and they’re accurate, just stick with them.

I am trying to detect peak frequencies using Simulink blocks and, when a frequency is detected, make the assigned LED blink at that particular frequency.

For example, when a peak is identified at 8 Hz, LED1 blinks and the others remain off.

I use the “Signal From Workspace” block to read Data.EEG and apply an FFT, but I have problems.

Does anyone have a good suggestion?

If you are having problems using Simulink with the data, I suggest you ask on the MATLAB newsgroup 🙂

If the problem is with the data itself or the way it looks after FFT, you need to be more specific. It’s a bit easier to help when knowing what the problem is 🙂

Hi Adnan

First, thank you for the data.

Can you help me with how to build an SSVEP stimulus using C++ or C#?

Hi Benabdallah,

Unfortunately, I cannot help with the implementation. For C++, you will probably need to look into OpenCV and OpenGL for making the flickering work. The tricky part is presenting the whole flickering object at once, so the first thing you need to look into is “double buffering”.

In C#, you can use all preexisting libraries, and the flickering will be much easier to present than in C++. Unfortunately, the flickering speed will depend heavily on how well you structure your code, and it can be very difficult to optimize the code so that the flickering happens with millisecond accuracy.

In either case, I recommend looking for preexisting code somewhere and asking for assistance on stackoverflow.com.
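Independently of the language and graphics library, it helps to decide in advance which video frames should show the target as “on”. The sketch below is written in Python for brevity and uses no graphics calls; it samples a square wave at the frame times, which is one common way to schedule flicker and not necessarily how the dataset stimulus was built. The function name `frame_states` is hypothetical, and the 120 Hz refresh rate matches the monitor described on this page.

```python
def frame_states(freq_hz, refresh_hz, n_frames):
    """On/off state per video frame, obtained by sampling a square wave at frame times."""
    states = []
    for n in range(n_frames):
        # Position inside the current flicker cycle, in the range [0, 1).
        cycle_position = (freq_hz * n / refresh_hz) % 1.0
        states.append(cycle_position < 0.5)      # first half of the cycle is "on"
    return states


REFRESH_HZ = 120
ten_hz = frame_states(10.0, REFRESH_HZ, 24)      # 12 frames per cycle: 6 on, 6 off
six_and_half_hz = frame_states(6.5, REFRESH_HZ, REFRESH_HZ)

print("10 Hz :", "".join("1" if s else "0" for s in ten_hz))
print("6.5 Hz:", "".join("1" if s else "0" for s in six_and_half_hz[:40]))
```

With double buffering, each state would be drawn into the back buffer and swapped in on the vertical sync, so the whole target changes within a single frame. Frequencies that do not divide the refresh rate evenly, such as 6.5 Hz at 120 Hz, end up with cycles of slightly varying length.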

Hi Adnan:

Thanks for your database. I tested it and I have great results. Can you tell me what BCI device you used to record the data?

Hi Fred,

Great to hear. The BCI device is a 2006 model of g.USBamp from g.tec (http://gtec.at).

Hello Sir,

Thanks a million for the database and for the splendid support you give young researchers like me. Please let me know why the stimulation frequencies are chosen in ascending order. Can we not choose them in random order, or in descending order? Also, does the magnitude of the spike depend only on the performance of the stimulus presentation paradigm? What are the factors that can affect the magnitude of the spikes in the power spectrum? I hope you can shed light on this soon.

Dear Ajitha,

Thank you for the kind words. You can definitely go with random ordering. I did it mainly to test the impact of neighboring flickering squares (e.g. will it be easier to see 6 when 6.5 is near than 9.3 when 8.2 is near).

The magnitude of the spike depends primarily on the stimulus, and secondarily on the subject’s ability to focus on the target. If you observe your subjects, you may see that some will move their eyes slightly while looking at the target, which will still result in a spike but possibly not as high. You can monitor EOG activity if you would like to quantify this part.

Across my test subjects, around 30 so far, I did not observe that vision impairment affected the magnitude as long as it was corrected with glasses.

Hi Adnan

Thanks for your useful data 🙂

I’m trying to calculate the minimum square error between the SSVEP and reference sine signals (sine signals at the stimulation frequency) as a feature, similar to the paper “Total Design of an FPGA-Based Brain–Computer Interface Control Hospital Bed Nursing System” by Kuo-Kai Shyu et al.

However, after filtering and averaging I find a phase shift in the SSVEP, which causes a wrong square error with respect to its reference sine. Do you know how I can solve this problem?

Hi Nasrin,

Averaging shouldn’t affect phase shift as long as the window size is an odd number and the manipulated value is in the center. The phase shifting is usually caused when you apply a filter in only one direction. To avoid it, you need to apply the filter in both directions of the signal (forward and reverse).

MATLAB has a built-in function for this called filtfilt, which you should look into even if you don’t use MATLAB 🙂
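For those not using MATLAB, SciPy offers the same forward-backward filtering as `scipy.signal.filtfilt`. The following sketch compares the phase shift at 10 Hz after one-directional filtering (`lfilter`) and after zero-phase filtering (`filtfilt`). The 4th-order 5–15 Hz Butterworth band-pass, the 512 Hz sampling rate and the synthetic sine are assumptions chosen only for the demonstration.

```python
import numpy as np
from scipy.signal import butter, filtfilt, lfilter

FS = 512                                   # assumed sampling rate in Hz
t = np.arange(10 * FS) / FS
x = np.sin(2 * np.pi * 10 * t)             # synthetic 10 Hz "SSVEP"

# Example band-pass filter; the order and band edges are illustrative choices.
b, a = butter(4, [5, 15], btype="bandpass", fs=FS)

one_way = lfilter(b, a, x)                 # forward only: introduces a phase lag
two_way = filtfilt(b, a, x)                # forward and reverse: zero phase


def phase_shift_deg(original, filtered, fs, freq):
    """Phase of `filtered` relative to `original` at `freq`, over the central 2 s."""
    middle = slice(4 * fs, 6 * fs)         # stay away from filter edge effects
    freqs = np.fft.rfftfreq(2 * fs, d=1.0 / fs)
    k = np.argmin(np.abs(freqs - freq))
    ref = np.fft.rfft(original[middle])[k]
    out = np.fft.rfft(filtered[middle])[k]
    return np.degrees(np.angle(out * np.conj(ref)))


shift_one_way = phase_shift_deg(x, one_way, FS, 10)
shift_two_way = phase_shift_deg(x, two_way, FS, 10)
print(f"lfilter : {shift_one_way:.1f} degrees")
print(f"filtfilt: {shift_two_way:.1f} degrees")
```

Note that forward-backward filtering applies the filter twice, so the magnitude response is squared; this matters if the filter order was chosen for a specific attenuation.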

Hello Sir,

Thank you for providing the dataset, it is very useful. I am currently using the dataset for my undergraduate final-year project for studying SSVEP characteristics. I shall give proper credit in the project report.

If I may ask, I am currently using FFT (using SciPy) to analyze the frequencies. Is it possible for the detected peak frequency to change depending on the windowing and the number of FFT points? Thank you.

Hey,

Absolutely. Most natural measurements are normally distributed: the actual frequency may be 9.2 Hz, but due to varying parameters the measurement can be slightly off. For SSVEP, this can be due to eye movements, blinking or closed eyes that affect the firing rate to the occipital lobe, the general eye performance of the subject, underperformance by the stimulus (e.g. the monitor not switching color fast enough for whatever reason), etc.

How accurately you hit your target frequency will depend on how clearly the signal was recorded during your window, so a bigger window does not necessarily result in a more accurate reading.

Example: If you force your subject to stare at the stimulus with eyes wide open and without any movement for 100 seconds, the subject’s eyes will probably become irritated. If you are unlucky, the eyelids may even start shaking and tears may start to develop (tons of noise). An FFT on the whole window will give you a much worse estimate of the frequency than, for example, 10 windows of 10 seconds (where you will realize that the last 2-3 windows should just be discarded due to too much noise).
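Separately from the physiological effects above, the reported peak also depends on the FFT grid itself: without zero-padding the bins are spaced 1/T Hz apart for a window of T seconds, and increasing the number of FFT points only interpolates between them. A small Python/NumPy sketch on a noise-free 9.3 Hz sine shows this; the 512 Hz sampling rate, the Hann window and the helper name `peak_frequency` are assumptions for the example.

```python
import numpy as np


def peak_frequency(signal, fs, n_fft=None):
    """Frequency of the largest FFT bin; `n_fft` larger than the signal zero-pads it."""
    signal = np.asarray(signal, dtype=float)
    n_fft = n_fft or signal.size
    windowed = (signal - signal.mean()) * np.hanning(signal.size)
    amplitude = np.abs(np.fft.rfft(windowed, n=n_fft))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    return freqs[np.argmax(amplitude)]


FS = 512          # assumed sampling rate in Hz
TRUE_F = 9.3      # frequency of the synthetic test signal in Hz


def sine(seconds):
    t = np.arange(seconds * FS) / FS
    return np.sin(2 * np.pi * TRUE_F * t)


peak_1s = peak_frequency(sine(1), FS)                      # bins every 1 Hz
peak_4s = peak_frequency(sine(4), FS)                      # bins every 0.25 Hz
peak_1s_padded = peak_frequency(sine(1), FS, n_fft=8192)   # bins every 0.0625 Hz

print("1 s window:           ", peak_1s, "Hz")
print("4 s window:           ", peak_4s, "Hz")
print("1 s window, zero-pad: ", peak_1s_padded, "Hz")
```

Zero-padding gives a finer grid but does not add information: two frequencies closer together than roughly 1/T still cannot be separated with a window of T seconds.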

I see, thank you for the enlightenment Sir.

If I may ask another question, how long in general should the subject be exposed to one stimulus for a ‘good’ result? I’m trying to record some data on my own (8-10 seconds for each stimulus) and only half of the resulting signals have the ‘correct’ frequencies detected so far, so I’m wondering if the stimulus exposure duration may be too short.

It depends on the accuracy and light intensity of the stimulus. If your stimulus were LED lights running on a fast hardware board, 0.5-1 second would be enough. In my own system (a PC connected to a 120 Hz monitor), I mostly look at 2 seconds, but up to 6 seconds if it’s hard to get a “good” result for a subject.

When I had the same problem as you, I decided to test my stimulus by checking that it was actually switching between colors at the right times, partly because when I stared at the flickering, it sometimes felt like it suddenly switched pattern. Printing out text when the light switches always gave correct times, but when I placed a light sensor over a flickering object on the monitor, I saw that the switching sometimes messed up, because the monitor/graphics card couldn’t always keep up with my application.

Below is an example of what it looked like before I realized my implementation was flawed (notice the area right before 0.3 is shorter). To produce the figure, I connected a light sensor to a data acquisition device and placed it over a flickering object in my stimulus.
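A check of this kind can be automated once the light-sensor trace has been recorded. The Python/NumPy sketch below measures the time between brightness transitions and flags intervals that deviate from the median by more than half a video frame. The trace is synthetic, with one deliberately shortened interval, and the sensor sampling rate, threshold and tolerance are assumptions.

```python
import numpy as np


def half_period_durations(trace, fs, threshold):
    """Durations (s) between successive threshold crossings of a sensor trace."""
    above = np.asarray(trace) > threshold
    edges = np.flatnonzero(above[1:] != above[:-1]) + 1   # sample index of each switch
    return np.diff(edges) / fs


FS_SENSOR = 10_000      # assumed sampling rate of the acquisition device in Hz
REFRESH_HZ = 120        # monitor refresh rate

# Synthetic 10 Hz flicker (0.05 s per half period); the fourth segment is too short.
segments = [500, 500, 500, 400, 500, 500, 500]
trace = np.concatenate(
    [np.full(n, 1.0 if i % 2 == 0 else 0.0) for i, n in enumerate(segments)]
)

durations = half_period_durations(trace, FS_SENSOR, threshold=0.5)
tolerance = 0.5 / REFRESH_HZ                               # half a video frame
suspicious = np.flatnonzero(np.abs(durations - np.median(durations)) > tolerance)

print("half-period durations (s):", durations)
print("suspicious intervals:", suspicious)
```

The first and last segments are not measured because they are bounded by only one transition.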

Hello Adnan,

I would like to know how you want to be cited in a paper. I’m using your database as a proof of concept for the code I wrote in MNE-Python for my thesis.

Thank you for your work!

Hey Rodrigo,

Congratulations on completing your thesis, and best of luck with the paper 🙂

I’m glad to hear that the dataset was useful to you. I believe the IEEE standard for referencing online material is:

“A. Vilic (2014). AVI SSVEP Dataset [Online]. Available http://setzner.com/avi-ssvep-dataset” (without clickable link)

Also, I would like to know if there is a copyright notice or some kind of licence that I should mention.

It’s included in the terms of use on page 3 of the PDF 🙂 As long as it’s for non-commercial use and cited, it’s fine.

Hi, thank you very much for the database

I tried different databases for my thesis and this one is one of the best. My supervisor wants me to compare my results with different publications on the same dataset. Do you know about any results obtained from this dataset, or do you have any yourself?

Once again, thank you very much.

Dear Filip,

I’m glad it’s useful to you, and thank you for the kind words. Best of luck and – more importantly – have fun with your thesis 🙂

I have lost my access to publications, so I can’t access scientific papers directly, but from a Google Scholar search, I found that these papers reference the dataset. Determining their quality is up to you 🙂

[1] Demir, A. F., Arslan, H., & Uysal, I. (2016, February). Bio-inspired filter banks for SSVEP-based brain-computer interfaces. In Biomedical and Health Informatics (BHI), 2016 IEEE-EMBS International Conference on (pp. 144-147). IEEE.

[2] Anindya, S. F., Rachmat, H. H., & Sutjiredjeki, E. (2016, October). A prototype of SSVEP-based BCI for home appliances control. In Biomedical Engineering (IBIOMED), International Conference on (pp. 1-6). IEEE.

[3] Sheykhivand, S., Rezaii, T. Y., Saatlo, A. N., & Romooz, N. Comparison Between Different Methods of Feature Extraction in BCI Systems Based on SSVEP.

[4] Dilshad, Azhar, et al. “On the Development of a Novel, Plug and Play SSVEP-EEG based General Purpose Human-Computer Interaction Device.” Asian Journal of Engineering, Sciences and Technology (2016): 1

[5] Anindya, S. F., Sutjiredjeki, E., & Rachmat, H. H. Perancangan dan Aplikasi Filter Digital untuk Pemrosesan dan Pengamatan Karakteristik Sinyal EEG-SSVEP.

Thank you very much for the fast response. For some reason I didn’t get an email notifying me that you had responded to my comment, even though I subscribed. That’s why I found it just now.

Hi,

I used an FFT for feature extraction and I need to find the fundamental target frequency. When the subject is looking at the target, the maximum amplitude should appear at the corresponding target frequency. Why does the maximum amplitude occur at a harmonic frequency in some of the datasets? Please help me find the fundamental target frequency.

I tried to calculate the fundamental frequency by applying an FFT and then finding the frequency that corresponds to the maximum amplitude.

The other method is limiting the sample size, but it takes more time to check when the fundamental target frequency occurs.

Hi Maha,

It is very common for some subjects to have higher amplitudes at the harmonics than at the fundamental target frequency. It is normal, but I have not met anyone who knows why this occurs.

In terms of finding the fundamental frequency, as you mention: the more samples you have, the better results you will get with a simple FFT. If you want to use fewer samples, there are thousands of articles on this topic, and I think you should read some of those and find the approach that best suits your needs. https://scholar.google.com/scholar?q=ssvep
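One simple way to cope with a dominant harmonic is to score each candidate fundamental by the summed amplitude at the fundamental and its first few harmonics, instead of taking the single largest peak. The Python/NumPy sketch below demonstrates this on a synthetic signal whose second harmonic is stronger than the 6.5 Hz fundamental. It is one possible approach, not the method used for the dataset; the sampling rate, candidate list and function name are assumptions.

```python
import numpy as np


def harmonic_score(signal, fs, f0, n_harmonics=3):
    """Sum of FFT amplitudes at f0 and its harmonics (nearest bins)."""
    signal = np.asarray(signal, dtype=float)
    amplitude = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return sum(
        amplitude[np.argmin(np.abs(freqs - h * f0))]
        for h in range(1, n_harmonics + 1)
    )


fs = 512                                   # assumed sampling rate in Hz
t = np.arange(10 * fs) / fs
rng = np.random.default_rng(2)
# Weak 6.5 Hz fundamental, strong 13 Hz second harmonic, plus noise.
eeg = (0.3 * np.sin(2 * np.pi * 6.5 * t)
       + 1.0 * np.sin(2 * np.pi * 13.0 * t)
       + 0.5 * rng.standard_normal(t.size))

candidates = [6.0, 6.5, 7.0, 7.5, 8.2, 9.3, 10.0]
scores = [harmonic_score(eeg, fs, f) for f in candidates]
best = candidates[int(np.argmax(scores))]

# For comparison: the single largest peak in the whole spectrum.
amplitude = np.abs(np.fft.rfft(eeg - eeg.mean()))
raw_peak = np.fft.rfftfreq(eeg.size, d=1.0 / fs)[np.argmax(amplitude)]

print("largest single peak:", raw_peak, "Hz")
print("harmonic-sum choice:", best, "Hz")
```

This only works cleanly when the harmonics of one candidate do not coincide with another candidate frequency, which is worth checking for your own stimulus set.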

Hi,

You designed the target with certain dimensions. Are there any references or reasons for those dimensions, or are they up to the designer?

I used FFT, CCA and cross-correlation for frequency identification. For some of the files I got wrong results (for a 6.5 Hz target frequency I get 6 Hz, for 6.5 Hz I get 7 Hz, and for 7.5 Hz I get 7 Hz): multi_sub1_2 (6, 8, 9) and multi_sub3_2 (6, 7, 9, 10). I got exact results for the remaining files, thanks for that. Is this error due to my code or to the database? Please help me find the solution.

Hi

The goal is to allow only one target to be covered by the fovea centralis at a time, so that flickering frequencies do not overlap. To calculate the size, see page 215 in MEG-EEG Primer (see link below). In my case, the distance from the screen was 60 cm, which with an angle of 1.7 degrees resulted in a target size of 1.77 cm.
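The size calculation can be reproduced with the standard visual-angle relation, size = 2 · d · tan(θ/2). The short Python sketch below assumes that this is the relation meant by the book reference; the function name is made up for the example. With d = 60 cm and θ = 1.7 degrees it gives roughly 1.78 cm, close to the value quoted above; the small difference is presumably due to rounding.

```python
import math


def target_size_cm(distance_cm, visual_angle_deg):
    """Size of an object that subtends `visual_angle_deg` at `distance_cm`."""
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2)


size = target_size_cm(60, 1.7)
print(f"target size: {size:.2f} cm")
```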

There could be many reasons why you misclassify. It could be your code, but it could also be that the second/third harmonics just reacted better there or that the person was not focused when looking. The easiest way is to look at the raw data and compare the data at each step of processing to see when and where the misclassification happens.

https://books.google.dk/books?id=cCpdDgAAQBAJ&pg=PA215&lpg=PA215&dq=trigonometry++fovea&source=bl&ots=6mySePVeRE&sig=yjJM7O0jTPgKK5vMKUZsQwy9qz0&hl=da&sa=X&ved=2ahUKEwjcgPLmvOHeAhVKhaYKHcurAgoQ6AEwDXoECAQQAQ#v=onepage&q=trigonometry%20%20fovea&f=false

Hi Adnan,

What do you think of stimulus frequencies less than 4 Hz? I’m interested in looking for such a “low” frequency response but throughout the literature, I see the target frequencies higher than 4 Hz. Is there any particular reason?

Thank you.

-Velu

Hi Adnan,

Thanks for sharing your experience with us.

I’ve recently started working in SSVEP data and I noticed that the target frequencies start around 5 Hz. Could the target frequencies be in the range [0.5, 4] Hz? I am trying to reason out why such “low” frequencies have not been used in the literature so far.

Sincerely,

Velu

Hi Velu,

SSVEPs have frequencies above 3-4 Hz, whereas “transient VEPs” have frequencies slower than that. Fast frequencies are preferred because we wish to make systems which react quickly to the user’s input. Imagine using 10 Hz vs 2 Hz stimuli. If you record EEG for 2 seconds, you’ll have 10 Hz × 2 s = 20 oscillations (ups and downs) with the 10 Hz stimulus, whereas you’ll only have 2 Hz × 2 s = 4 oscillations in the signal for the transient VEP. So to get equally much information, you’ll need to record five times longer with 2 Hz. In addition, the user will also cause more artifacts during the longer recording because of blinking and moving. So while slow frequencies are more pleasant to look at, there will be much less information to process as to whether the user is actually looking at the stimuli.

Hi Adnan,

Thanks for your prompt reply.

I worked on artifact removal for the past few months and now I am back to SSVEP analysis. I downloaded your datasets and ran the CCA algorithm (an alternative to FFT). I found the following stimulus frequencies for the four “Single” datasets. I would appreciate it if you could confirm them.

Sub1 – 8.2 Hz

Sub2 – 10.0 Hz

Sub3 – 10.0 Hz

Sub4 – 8.2 Hz

Thank you once again!

-Velu

Hi Velu,

Glad to hear you’re advancing 🙂

The data per subject are not one continuous stream. Instead, each subject has looked at one target per trial for some number of trials (see files “Sub{X}_singletarget_Target.{mat/dat}”).

Sub1 has 27 targets/trials (if you are interested in 8.2 Hz, the target indices are: 22, 23, 24)

Sub2 has 26 targets/trials (if you are interested in 10 Hz, the target indices are: 1, 2, 3)

Sub3 has 21 targets/trials (if you are interested in 10 Hz, the target indices are: 1, 2, 3)

Sub4 has 21 targets/trials (if you are interested in 8.2 Hz, the target indices are: 16, 17, 18)
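Selecting the trials for one target frequency then becomes a lookup in the target vector. The Python/NumPy sketch below uses placeholder data: the array layout (samples × trials), the variable names and the order of the target frequencies are assumptions, arranged only so that 8.2 Hz lands on trials 22 to 24 as listed for Sub1. With the real files you would load the EEG matrix and the matching target vector instead.

```python
import numpy as np


def trials_for_target(eeg, targets, freq, tol=1e-6):
    """Return the 1-based trial indices and EEG columns recorded for `freq`."""
    idx = np.flatnonzero(np.abs(np.asarray(targets, dtype=float) - freq) < tol)
    return idx + 1, eeg[:, idx]


# Placeholder data standing in for one subject's single-target recordings.
rng = np.random.default_rng(0)
targets = np.repeat([10.0, 9.3, 9.0, 8.0, 7.5, 7.0, 6.5, 8.2, 6.0], 3)  # made-up order
eeg = rng.standard_normal((1024, targets.size))      # samples x trials (assumed layout)

one_based, selected = trials_for_target(eeg, targets, 8.2)
print("trials with 8.2 Hz:", one_based)
print("selected EEG shape:", selected.shape)
```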

Hi Adnan

thanks for sharing your experience with us.

We are doing our final school project on SSVEP, and I have a question. We already have the power spectral density plot, but now we have a big problem: we have to extract features to identify an SSVEP. Can you tell us which features we could use for that? We already have one, the centroid, but we need at least 4 or 5 more.

Thank you

Hi Maria,

Sorry for the late reply, and I hope it’s not too late.

Characteristics that come to mind:

* ratio or difference between the most prominent spikes

* area under the curve for spikes

* width of spikes

* combinations of spikes with their harmonics
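As a rough illustration of how the characteristics listed above could be turned into numbers, here is a Python/NumPy sketch that computes them from a simple periodogram. The exact definitions (band of ±0.5 Hz around the spike, 4–45 Hz search range, width as the number of bins above half the peak) are choices made for this example, and the signal is synthetic.

```python
import numpy as np


def power_spectrum(signal, fs):
    """Hann-windowed periodogram (unnormalised power per FFT bin)."""
    x = np.asarray(signal, dtype=float)
    x = (x - x.mean()) * np.hanning(x.size)
    return np.fft.rfftfreq(x.size, d=1.0 / fs), np.abs(np.fft.rfft(x)) ** 2


def spike_features(freqs, power, f0, half_band=0.5, f_range=(4.0, 45.0)):
    """Example features for a spike at f0; all definitions are illustrative."""
    df = freqs[1] - freqs[0]
    in_range = (freqs >= f_range[0]) & (freqs <= f_range[1])
    band = np.abs(freqs - f0) <= half_band
    harmonic_band = np.abs(freqs - 2 * f0) <= half_band
    peak = power[band].max()
    rival = power[in_range & ~band & ~harmonic_band].max()   # strongest other spike
    return {
        "peak_ratio": peak / rival,                           # ratio between spikes
        "peak_area": power[band].sum() * df,                  # area under the spike
        "peak_width_hz": np.count_nonzero(power[band] >= peak / 2) * df,
        "harmonic_sum": peak + power[harmonic_band].max(),    # spike plus 2nd harmonic
    }


fs = 512                                   # assumed sampling rate in Hz
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(3)
eeg = (np.sin(2 * np.pi * 8.2 * t)
       + 0.4 * np.sin(2 * np.pi * 16.4 * t)
       + 0.5 * rng.standard_normal(t.size))

freqs, power = power_spectrum(eeg, fs)
features = spike_features(freqs, power, f0=8.2)
for name, value in features.items():
    print(f"{name}: {value:.3g}")
```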

Hi Adnan,

I tried to apply CCA code found at https://www.mathworks.com/matlabcentral/fileexchange/47499-msetccaforssvepbci_demo-zip to your dataset, starting with “Sub1_1_multitarget.mat”. I tried to customize the code to fit the structure of your dataset, but I am not able to proceed and I get an error.

Could you kindly look into what I am doing wrong?

% Demo of Msetcanoncorr for four-class SSVEP Recognition in BCI %

% by Yu Zhang, ECUST, 2013.6.12

% Email: zhangyu0112@gmail.com

clc;

clear all;

close all;

%% Initialize parameters

Fs=512; % sampling rate

load Sub1_1_multitarget;

signal = Data.EEG(:,1);

%t_length=4; % data length (4 s)

t_length=length(signal);

TW=1:1:t_length;

TW_p=round(TW*Fs);

n_run=10; % number of used runs

sti_f=[10.00 9.30 8.20 7.50 7.00 6.50 6.00]; % stimulus frequencies 10, 9, 8, 6 Hz

n_sti=length(sti_f); % number of stimulus frequencies

n_correct=zeros(2,length(TW));

% 8 channels x 1000 points x 20 trials x 4 stimulus frequencies

%% canoncorr for SSVEP recognition

% Construct reference signals of sine-cosine waves

N=2; % number of harmonics

ref1=refsig(sti_f(1),Fs,t_length*Fs,N);

ref2=refsig(sti_f(2),Fs,t_length*Fs,N);

ref3=refsig(sti_f(3),Fs,t_length*Fs,N);

ref4=refsig(sti_f(4),Fs,t_length*Fs,N);

% Recognition

for run=1:10

for tw_length=1:4 % time window length: 1s:1s:4s

fprintf('canoncorr Processing... TW %fs, No.crossvalidation %d \n',TW(tw_length),run);

for j=1:4

[wx1,wy1,r1]=canoncorr(Sub1_1_multitarget(:,1:TW_p(tw_length),run,j),ref1(:,1:TW_p(tw_length)));

[wx2,wy2,r2]=canoncorr(Sub1_1_multitarget(:,1:TW_p(tw_length),run,j),ref2(:,1:TW_p(tw_length)));

[wx3,wy3,r3]=canoncorr(Sub1_1_multitarget(:,1:TW_p(tw_length),run,j),ref3(:,1:TW_p(tw_length)));

[wx4,wy4,r4]=canoncorr(Sub1_1_multitarget(:,1:TW_p(tw_length),run,j),ref4(:,1:TW_p(tw_length)));

[v,idx]=max([max(r1),max(r2),max(r3),max(r4)]);

if idx==j

n_correct(1,tw_length)=n_correct(1,tw_length)+1;

end

end

end

end

%% Plot accuracy

accuracy=100*n_correct/n_sti/n_run;

col={'b-*','r-o'};

for mth=1:2

plot(TW,accuracy(mth,:),col{mth},'LineWidth',1);

hold on;

end

xlabel('Time window length (s)');

ylabel('Accuracy (%)');

grid;

xlim([0.75 4.25]);

ylim([0 100]);

set(gca,'xtick',1:4,'xticklabel',1:4);

title('\bf Msetcanoncorr vs canoncorr for SSVEP Recognition');

h=legend({'canoncorr','Msetcanoncorr'});

set(h,'Location','SouthEast');

Hi Bayar,

Unfortunately, I no longer have a version of MATLAB, so I cannot test your code. From looking at your code, my best advice is to split it up into more variables and verify that each statement actually performs what you think it does. That is, define variables to make it easier to debug step by step and see where it fails, for example for expressions such as

“TW_p(tw_length)” or

Sub1_1_multitarget(:,1:TW_p(tw_length),run,j)
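One thing worth checking when stepping through the script: the signal is read from `Data.EEG(:,1)`, but the loop indexes `Sub1_1_multitarget` as if it were a 4-D array (channels × points × trials × frequencies), which is the layout of the original demo data and may not exist after loading this file. For comparison, here is a small, self-contained Python/NumPy sketch of CCA-based frequency detection on a single channel. It uses the QR/SVD formulation of canonical correlation on a synthetic signal; it is not a port of the demo, and the function names, sampling rate and test signal are assumptions.

```python
import numpy as np


def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine references at `freq` and its harmonics, shape (n_samples, 2*n_harmonics)."""
    t = np.arange(n_samples) / fs
    columns = []
    for h in range(1, n_harmonics + 1):
        columns.append(np.sin(2 * np.pi * h * freq * t))
        columns.append(np.cos(2 * np.pi * h * freq * t))
    return np.column_stack(columns)


def max_canonical_corr(x, y):
    """Largest canonical correlation between the columns of x and y."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]                      # single channel -> one column
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    qx, _ = np.linalg.qr(x)                 # orthonormal bases of both column spaces
    qy, _ = np.linalg.qr(y)
    singular_values = np.linalg.svd(qx.T @ qy, compute_uv=False)
    return float(min(singular_values[0], 1.0))


fs = 512                                    # assumed sampling rate in Hz
stim_freqs = [10.0, 9.3, 8.2, 7.5, 7.0, 6.5, 6.0]   # as in sti_f above

# Synthetic 4-second single-channel segment containing a 9.3 Hz response.
rng = np.random.default_rng(4)
t = np.arange(4 * fs) / fs
eeg = np.sin(2 * np.pi * 9.3 * t + 0.7) + rng.standard_normal(t.size)

correlations = [
    max_canonical_corr(eeg, reference_signals(f, fs, eeg.size)) for f in stim_freqs
]
detected = stim_freqs[int(np.argmax(correlations))]
print("detected:", detected, "Hz")
```

Note that the loop runs over all seven stimulus frequencies, whereas the script above builds only `ref1` to `ref4`.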

Thank you for replying. I tried to do so, but I had no luck.

Hi Adnan,

Thanks for your helpful info

I have a question about creating a stimulus on an LCD, and I am confused :). Which software is better for it? PsychoPy, Psychtoolbox or others?

Sincerely,

MohammadReza

Hi Mohammad,

The stimulus precision (how precisely the black-white pattern repeats itself – note that “accuracy” and “precision” mean different things) will directly influence your performance results. The less precise your flickering is, the longer the flickering period has to be for you to see SSVEP.

The Psychtoolbox documentation mentions: “We are aware that many people do run the toolbox under Vista or Windows-7 and we didn’t receive many reports of trouble so far, but we can’t recommend it at all for dual-display stereo stimulus presentation or for tasks with a need for high visual timing precision.”

I don’t know if PsychoPy is better, but it’s worth giving it a try. Keep in mind as well that the less the PC is running in the background, the more it can focus on the flickering task. For example, if you are running your stimulus and an Outlook notification pops up with a new mail, the stimulus will slow down from the time the popup appears until it goes away. So make sure to have as few software distractions as possible.

Best regards,

Adnan